Piggybacking off of yesterday’s post, there was a rumor earlier in the month that the next wave of iOS apps to come to the Mac are Apple’s very own media apps.

9to5mac.com - Next major macOS version will include standalone Music, Podcasts, and TV apps, Books app gets major redesign | Guilherme Rambo:

> Fellow developer Steve Troughton-Smith recently expressed confidence about some evidence found indicating that Apple is working on new Music, Podcasts, and perhaps Books apps for macOS, to join the new TV app.
>
> I’ve been able to independently confirm that this is true. On top of that, I’ve been able to confirm with sources familiar with the development of the next major version of macOS – likely 10.15 – that the system will include standalone Music, Podcasts, and TV apps, but it will also include a major redesign of the Books app. We also got an exclusive look at the icons for the new Podcasts and TV apps on macOS.

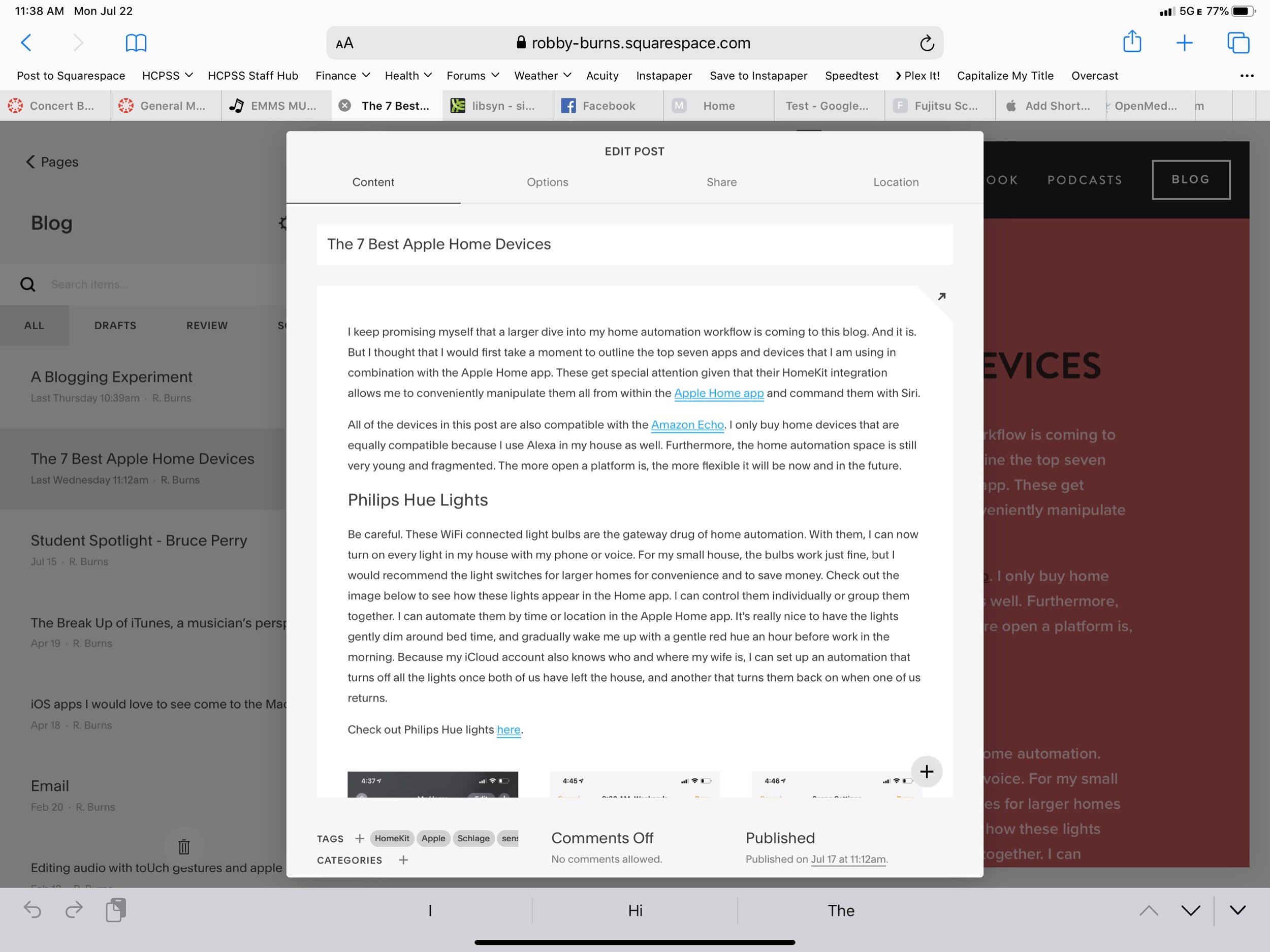

For years, I have been arguing that iTunes should be broken up into separate apps on the Mac. As a musician and teacher who is an absolute iTunes power user, and who depends on music library management tools, I thought it was worth digging into the implications of this a little bit.

If you are a podcast listener and have room in your diet for some shows about Apple technology, last week’s episodes of Upgrade and ATP featured an astoundingly good conversation on this topic. Both shows discuss the implications not only for the future of iTunes, but for the very nature of the Mac itself.

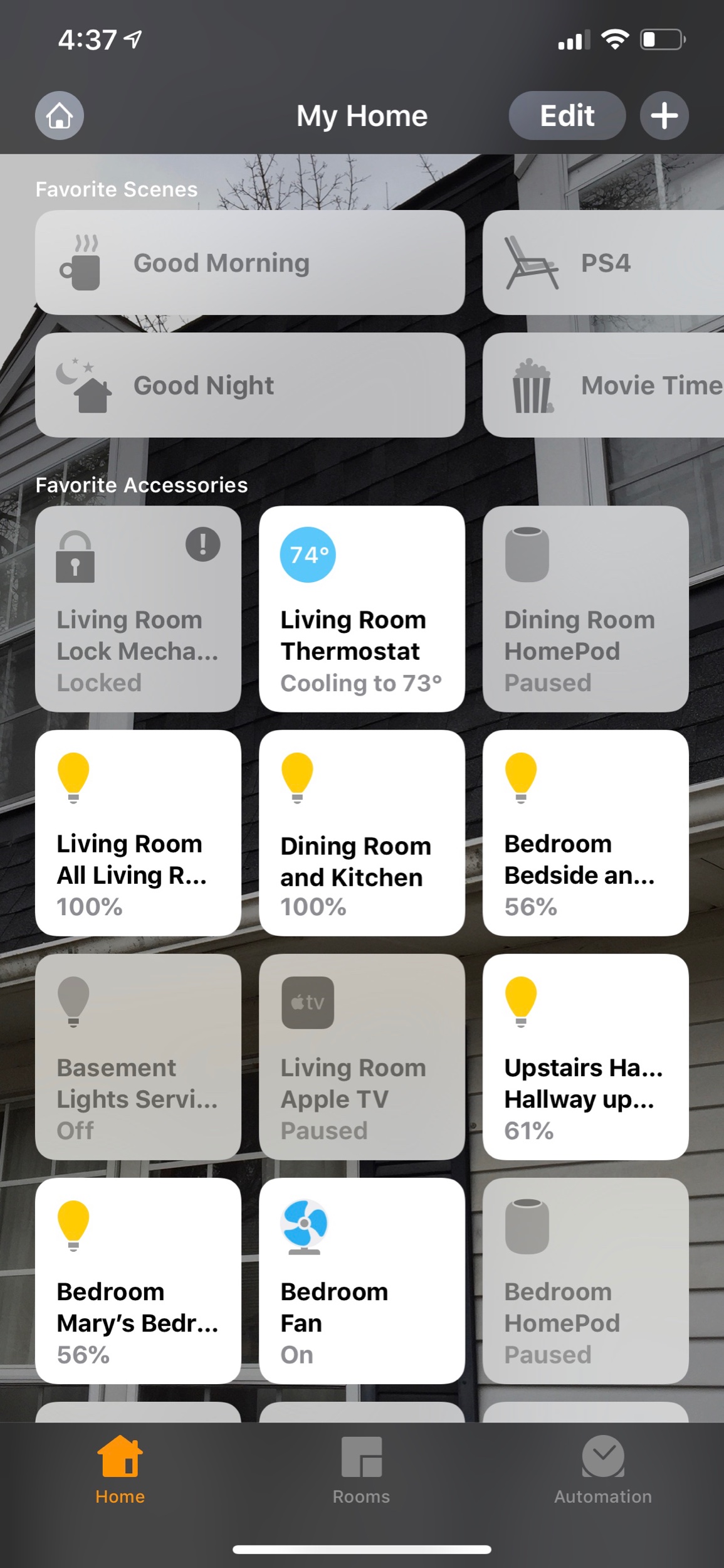

I am so excited for the TV app and the Podcasts app to get their own attention. This has been needed for a long time. I imagine Podcasts will be solid out of the gate. I will kind of miss the current TV app icon on iOS and the Apple TV, but I understand that Apple needs to brand the app with its logo, since the app is coming to third-party TVs and Amazon Fire products this fall with the launch of Apple’s new TV service. I don’t know how a Books app based on the iOS version would work with my imported PDF book library, but the app is already wildly inconsistent between iOS and macOS, so I cannot imagine it getting any worse.

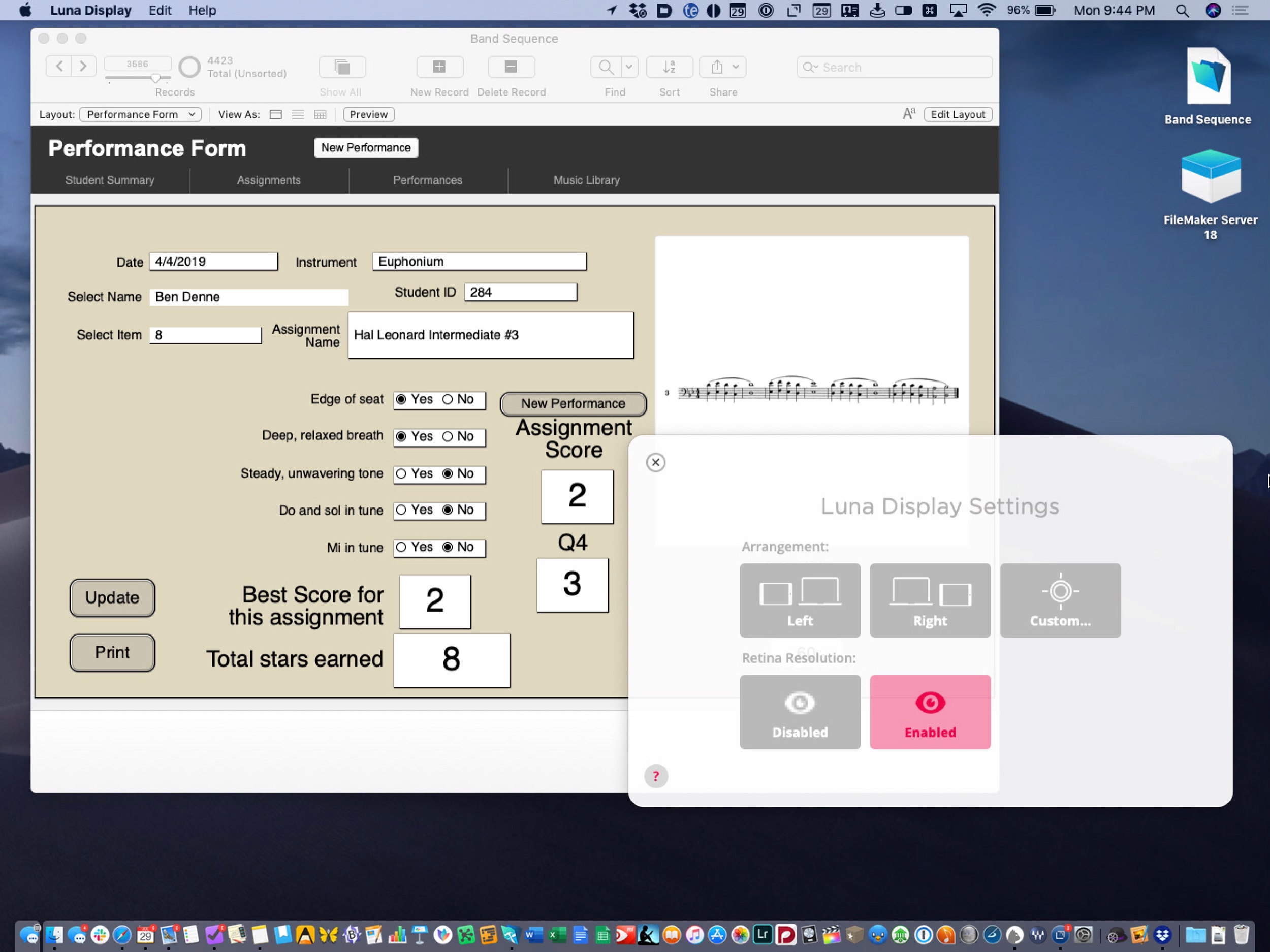

I have traditionally relied heavily on iTunes to organize my music library, recordings of my ensemble, and video of my concert performances. I detail my entire music and video workflows in my book, Digital Organization Tips for Music Teachers.

iTunes is the only app that allows me to store my personal library alongside a streaming music library and sync it across multiple devices. This is what has set it apart from Spotify for me over the past few years. iTunes also has some great video organization tools. For years now, I have organized all video of my school ensemble’s live performances (amongst numerous other musical performances and home videos) in the video section of iTunes, and then pointed a Plex server at the folder of files so that I can stream them to my Apple TV and iOS devices on the go.

If the new Music and TV apps are just like their iOS counterparts, there are a whole lot of features I depend on that could potentially get ditched. Here are a few of them...

- Importing my own music. The iOS version of Music can’t even import a song. That’s right! If I buy an album on Bandcamp, or get an audio file of a professional band performing a tune my ensemble is working on, I can drag it right into iTunes on my Mac, and it will sync to my mobile devices through iCloud Music Library. I imagine Apple has at least figured this one out if it is going to ship an iOS-based Music app on the Mac this fall.

- Metadata control. It would be a sad day if I could not press the info button on a song and add my own comments and ratings, or adjust the title, album name, etc.

- Smart Playlists. Jazz and classical recordings are notoriously difficult to manage in iTunes because their metadata is so complex. In addition to editing the artist and album information for these recordings, I have spent some time adding extra info to the comments field of my songs and then creating smart playlists that filter on it. For example, if Miles Davis is tagged in every recording he sat in on, you can make playlists like ‘Songs Miles Soloed On Between 1961-75.’

- Adding video. QuickTime (much like Preview) exists only on the Mac; on iOS, its functionality is built into the system and appears whenever you tap a media file (or a PDF, in the case of Preview). Apple never had a dedicated app for managing video (although there is the awkward iMovie Library feature, which has an arbitrary file limit). That said, iTunes is a pretty great utility for this purpose. I would hate to lose its video management features, even though they were never on iOS to begin with. The TV app looks more and more like it is built to fulfill Apple’s TV strategy, which is to aggregate as much TV and movie content from as many providers as possible into a unified entertainment service. Don’t get me wrong, I am excited; I just don’t see myself using it to organize recordings of my band concerts.

Presumably iTunes isn’t going anywhere any time soon. Because of these legacy features, including the need to sync older iOS devices to a Mac, I imagine it will still ship on the Mac, buried in the Utilities folder, for years to come. Hopefully, users will still be able to perform actions like the ones I listed above in iTunes and enjoy the benefits of them in the new Music app.

In conclusion, I remain highly cynical about this transition because Apple has not seemed interested in making good apps in recent years. At the same time, I am enthusiastic about the long-term benefit. If Apple developers are writing code for just one version of their apps instead of two, it is more likely that the iOS versions of the software will get elevated. That is exciting, even if it means that, at first, the Mac apps cannot do everything they always could. Coupled with rumors that Apple is going to release an ARM-based Mac in the near future, I would like to believe that years down the road, we will be getting closer to a shared app platform across all Apple devices, with feature parity and less distinction between input devices and the hardware an app is running on.